1. 概述

本文档主要介绍沐曦数据中心管理工具(MetaX Data Center Manager,MX-DCM),指导用户如何安装和使用该工具对部署在数据中心的沐曦GPU进行监控和管理。

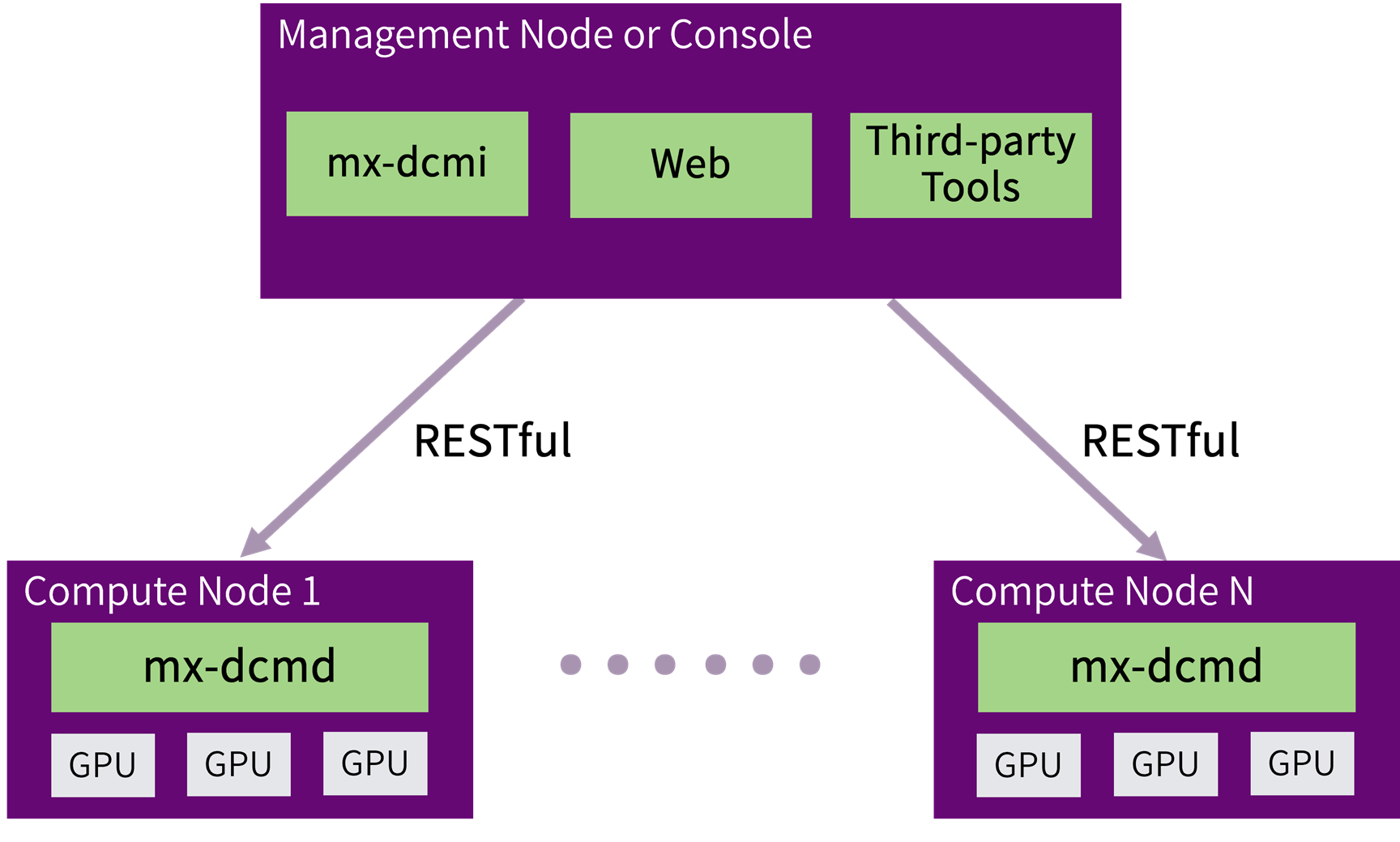

沐曦数据中心管理工具提供了对部署在集群和数据中心环境中的沐曦GPU进行管理的功能。MX-DCM概览如图 1.1 所示。

图 1.1 MX-DCM概览

对于部署沐曦GPU的主机,MX-DCM主要提供了以下功能:

设备分组管理

设备行为监控

设备数据采集

设备配置管理

设备策略设置

设备状态检查以及诊断

MX-DCM向用户提供以下访问接口:

MX-DCM的命令行工具(mx-dcmi)

调用RESTful API

集成了MX-DCM C API库(mxdcml)的第三方管理工具

1.1. 功能特点

设备快速诊断

MX-DCM提供了各种级别的设备诊断功能。

任务级别的统计

通过使用MX-DCM,用户可以收集分析任务级别的GPU使用情况。

GPU分组管理

在数据中心环境中,一般一台主机会安装多张GPU,一个任务可能跑在多台主机上。为GPU分组的功能,将使用户的操作更便捷。

GPU管理策略

使用沐曦的设备可以方便地识别定位问题。管理策略的设置,可以帮助设备自动从故障中恢复,隔离有问题的硬件,从而保证高可靠性,并简化设备管理工作。

1.2. 目标用户

MX-DCM主要面向以下用户:

需要管理GPU设备的数据中心运维人员

需要使用mxdcml的软件开发人员

需要深入了解GPU行为的技术人员以及现场应用工程师

2. 安装与运行

2.1. 用户须知

2.1.1. 支持的平台

支持曦云® 系列GPU。

支持的软硬件平台兼容列表,参见《曦云® 系列通用计算GPU用户指南》中“系统支持范围”章节。

2.1.2. 系统模块

MX-DCM包含以下模块:

MX-DCM库(mxdcml)

mxdcml是MX-DCM的核心模块。mxdcml封装了底层通信以及设备管理细节,对外提供C语言接口,方便第三方工具调用。mxdcml提供静态库和动态库。

MX-DCM常驻进程(mx-dcmd)

mx-dcmd运行在安装有GPU的主机上,对外提供RESTful接口,执行GPU监控和管理功能。

MX-DCM命令行工具(mx-dcmi)

mx-dcmi运行在管理节点上。mx-dcmi接收用户命令,解析命令参数,通过调用mxdcml接口实现对远程主机上的设备进行管理。

2.2. 安装

安装包种类包括支持x86架构的deb、rpm包,以及x86与Arm的镜像。用户需根据操作系统解压相应压缩包。

2.2.1. 安装mx-dcmi

支持使用deb/rpm包安装。推荐安装在集群管理节点。

执行以下命令安装deb包。

sudo dpkg -i mxdcmi-x.x.x.x-x.x.x.x.amd64.deb

执行以下命令安装rpm包。

sudo rpm -ivh mxdcmi-x.x.x.x-x.x.x.x.x86_64.rpm

2.2.2. 安装mx-dcmd

支持使用deb/rpm包安装。需安装在带GPU卡的服务器中。

执行以下命令安装deb包。

sudo dpkg -i mxdcmd-x.x.x.x-x.x.x.x.amd64.deb

执行以下命令安装rpm包。

sudo rpm -ivh mxdcmd-x.x.x.x-x.x.x.x.x86_64.rpm

支持使用容器镜像。

执行以下命令加载镜像,根据架构选取相应的容器镜像。对于x86架构,选择amd64结尾的镜像;对于Arm架构,选择arm64结尾的镜像。

docker load -i mxdcmd_-x.x.x.x-x.x.x.x.amd64.xz docker load -i mxdcmd_-x.x.x.x-x.x.x.x.arm64.xz

2.3. 运行

2.3.1. mx-dcmd直接运行

mx-dcmd默认安装在/opt/mxdcm/bin/mx-dcmd,同时在/usr/local/bin下创建了软连接,可全局使用。

使用 mx-dcmd -h 命令查看启动时可以配置的参数:

USAGE:

mx-dcmd [-hv] [-c <FILE>] [-l <DIR>]

Where:

-c <FILE>, --config <FILE>

Path to the configuration file, default: /opt/mxdcm/config/mxdcmd.cfg

Either of:

-s, --stdout

Log to stdout, default: 0

-l <DIR>, --logdir <DIR>

Path to the log directory, default: ./mxdcmd-log

--, --ignore_rest

Ignores the rest of the labeled arguments following this flag.

-v, --version

Displays version information and exits.

-h, --help

Displays usage information and exits.

MetaX Data Center Management Daemon

以上参数均是可选的,可以直接运行 mx-dcmd 启动。

2.3.2. mx-dcmd容器内运行

使用沐曦发布的容器镜像,可以按照如下命令启动:

使用默认配置启动:

docker run -d --name=mx-dcmd --device=/dev/mxcd --device=/dev/dri -v /var/log:/var/log -v /opt/maca:/opt/maca -v /opt/mxdriver:/opt/mxdriver -p 0.0.0.0:<hostPort>:23412 <image name>

<hostPort>:mx-dcmd对外的HTTP监听端口--device=/dev/mxcd --device=/dev/dri:将主机上的沐曦设备挂载到容器--device=/dev/mxgvm:开启虚拟化时默认只有VF设备可操作,若需要在容器中操作PF,在启动容器时需将/dev/mxgvm挂载到容器。仅当开启虚拟化时使用-v /var/log:/var/log:将主机/var/log目录挂载到容器-v /opt/maca:/opt/maca:将MXMACA库挂载到容器-v /opt/mxdriver:/opt/mxdriver:将mxdriver挂载到容器,此参数需在MXMACA-C500-Driver-2.23及之后的版本中添加使用

使用自定义配置启动:

docker run -d --name=mx-dcmd --device=/dev/mxcd --device=/dev/dri -v /var/log:/var/log -v /opt/maca:/opt/maca -p 0.0.0.0:<hostPort>:<dockerPort> -v <hostCfg>:<dockerCfg> -v <hostLogDir>:<dockerLogDir> <image name> -c <dockerCfg> -l <dockerLogDir>

<dockerPort>:mx-dcmd在容器内启动后打开的监听端口<dockerCfg>:mx-dcmd在容器内使用的配置文件路径<dockerLogDir>:mx-dcmd在容器内运行保存日志的目录<hostPort>:主机对外暴露的mx-dcmd HTTP监听端口<hostCfg>:主机上的用户自定义配置文件路径<hostLogDir>:主机上保存mx-dcmd日志的目录

备注

当前启动容器方式暂不支持NIC的自检及MCCL测试。

2.3.3. mx-dcmi使用

mx-dcmi默认安装在/opt/mxdcm/bin/mx-dcmi,同时在/usr/local/bin下创建了软连接,可全局使用。

2.3.3.1. 使用帮助

mx-dcmi -h

执行命令后,显示如下:

USAGE:

mx-dcmi [-hv] subsystem

Where:

--, --ignore_rest

Ignores the rest of the labeled arguments following this flag.

-v, --version

Displays version information and exits.

-h, --help

Displays usage information and exits.

Subsystem <discovery|group|fieldgroup|dmon|stats|health|config|diag|topo|

nic|mccl|test>

(required) The desired subsystem to be accessed.

Subsystems available:

discovery Discovery GPUs on the system [mx-dcmi discovery -h for more info]

group GPU Group Management [mx-dcmi group -h for more info]

fieldgroup Field Group Management [mx-dcmi fieldgroup -h for more info]

dmon Stats Monitoring fo GPUs [mx-dcmi dmon -h for more info]

stats Process Statistics [mx-dcmi stats -h for more info]

diag System Validation/Diagnostic [mx-dcmi diag -h for more info]

health Health Monitoring [mx-dcmi health -h for more info]

topo GPU Topology [mx-dcmi topo -h for more info]

nic Nic info and diagnostic [mx-dcmi nic -h for more info]

mccl Mccl diagnostic [mx-dcmi mccl -h for more info]

MetaX Data Center Management Interface

2.4. 配置文件

mx-dcmd安装后,配置文件在/opt/mxdcm/config/mxdcmd.cfg。用户可以自行修改其中的配置参数。

可配置的参数包括 ip,port,loglevel 及各个monitor的初始状态。

配置文件示例:

# mx-dcmd config file

#ip = 1.1.1.1

# if port is not set, 23412 will be used

port = 23412

# 0:off, 1:debug, 2:info, 3:warn, 4:error

loglevel = 2

# 0:off, 1:on, default:on

#logMonitor = 0

#eventMonitor = 0

2.5. 日志文件

mx-dcmd运行时的日志文件默认保存在./mxdcmd-log/mxdcmd_<date>.log。

<date>表示程序启动时的日期。用户可以在mx-dcmd启动时通过

-l <DIR>参数修改日志存放的目录。若日志需要直接输出到终端,可在运行mx-dcmd时指定

-s,注意-s与-l不可同时使用。

3. MX-DCM功能

本章介绍MX-DCM的主要功能,以及 mx-dcmi 命令的使用示例。

3.1. 设备查询

用户可以使用设备查询功能查看指定主机上的可用设备。

3.1.1. 使用帮助

mx-dcmi discovery -h

执行命令后,显示如下:

USAGE:

mx-dcmi discovery [-hlv] [--gpuid <gpuid>] [--host <IP:PORT>]

Where:

-l, --list <FILE>

List all GPUs discovered on the host.

--host <IP:PORT>

The target host to connect.

--, --ignore_rest

Ignores the rest of the labeled arguments following this flag.

-v, --version

Displays version information and exits.

-h, --help

Displays usage information and exits.

MetaX Data Center Management Interface

3.1.2. 查看设备列表

mx-dcmi命令:

mx-dcmi discovery --host [IP]:[PORT] -l

操作成功,显示如下:

============= MetaX Data Center Management Interface Log ============= Timestamp : Wed Mar 6 15:00:00 2024 +--------+------------------------------------------------------------+ | GPU ID |-Device Information | +--------+------------------------------------------------------------+ | 0 |-Name: MXC500 | | |-PCI Bus ID: 0000:4f:00.0 | | |-Device UUID: GPU-dcf2c6b3-bf23-7535-8406-fa0d793df737 | +--------+------------------------------------------------------------+ | 1 |-Name: MXC500 | | |-PCI Bus ID: 0000:50:00.0 | | |-Device UUID: GPU-1323796f-71df-ea8c-ed3d-8389dbd9eef4 | +--------+------------------------------------------------------------+

RESTful API:

curl -i -X GET http://[IP]:[PORT]/api/v1/gpus

操作成功,显示如下:

HTTP/1.1 200 0K Content-type: application/json Content-Length: 206 {"gpus":[{"id":0,"bdfid":"0000:4f:00.0","uuid":"GPU-dcf2c6b3-bf23-7535-8406fa0d793df737","type":"MXC500"},{"id":1,"bdfid":"0000:50:00.0","uuid":"GPU-1323796f-71df-ea8c-ed3d-8389dbd9eef4","type":"MXC500"}]}

3.1.3. 查看指定设备的属性信息

mx-dcmi命令:

mx-dcmi discovery --host [IP]:[PORT] --gpu-id 0

操作成功,显示如下:

============= MetaX Data Center Management Interface Log ============= Timestamp : Wed Mar 6 15:00:00 2024 +---------------------------+-----------------------------------------+ | GPU ID: 0 |-Device Information | +---------------------------+-----------------------------------------+ | Device Name |-MXC500 | | PCI Bus ID |-0000:4f:00.0 | | Device UUID |-GPU-dcf2c6b3-bf23-7535-8406-fa0d793df737| | Serial Number |-T6R022_4_2_8 | | KMD Version |-2.1.0 | | VBIOS |-0.18.0.0 | +---------------------------+-----------------------------------------+ | Shut down Temperature(C) |-108.00 | | Slow down Temperature(C) |-95.00 | | Power Limit(W) |-350 | +--------------------------+------------------------------------------+

RESTful API:

curl -i -X GET http://[IP]:[PORT]/api/v1/gpus/gpu/0

操作成功,显示如下:

HTTP/1.1 200 0K Content-type: application/json Content-Length: 232 {"id":0,"bdfid":"0000:4f:00.0","uuid":"GPU-dcf2c6b3-bf23-7535-8406fa0d793df737","name":"MXC500","serialNumber":"T6R022_4_2_8","kmd":"2.1.0","vbios":"0.18.0.0","shutdownTemperature":"108.0","slowdownTemperature":"95.0","powerLimit":"350"}

3.2. 设备分组

在MX-DCM里,几乎所有的设备操作都是以设备组为单位。用户可以创建、删除以及更新设备组,并将设备组用于后续的操作。设备组是为了方便用户对多个设备执行相同的操作。不同的组所包含的设备集合可以有重复,因为不同组可以用于不同的管理需求。

Group 0是默认创建的设备组,包含所有的设备,不可更改。

3.2.1. 使用帮助

mx-dcmi group -h

执行命令后,显示如下:

USAGE:

mx-dcmi group [-hlv] [-a <>|-c <>|-d <[0, 63]>|-i|-r <>] [-g <[0, 63]>]

[--host <IP:PORT>]

Where:

-l, --list

List all groups.

--host <IP:PORT>

The target host to connect.

-g <[0, 63]>, --group <[0, 63]>

Group id

Either of:

-a <>, --add <>

Add devices to group

-c <>, --create <>

Create a group on the remote host.

-d <[0, 63]>, --delete <[0, 63]>

Delete a group on the remote host.

-r <>, --remove <>

Remove gpus from group

-i, --inquiry

Inquiry group information

--, --ignore_rest

Ignores the rest of the labeled arguments following this flag.

-v, --version

Displays version information and exits.

-h, --help

Displays usage information and exits.

MetaX Data Center Management Interface

3.2.2. 创建设备组

mx-dcmi命令:

mx-dcmi group --host [IP]:[PORT] -c group1

操作成功,显示如下:

create group group1 with a group ID of 1 successfully

RESTful API:

curl -i -X POST http:// [IP]:[PORT]/api/v1/groups/group1

操作成功,显示如下:

HTTP/1.1 200 OK Content-type: application/json Content-Length: 13 {"groupid": 1}

3.2.3. 添加设备到设备组

以下命令把gpu#0和gpu#1添加到设备组#1。

mx-dcmi命令:

mx-dcmi group --host [IP]:[PORT] -a 0,1 -g 1

操作成功,显示如下:

add gpus operation successfully

RESTful API:

curl -i -X PUT http://[IP]:[PORT]/api/v1/groups/1/gpu?gpuid=0,1

操作成功,显示如下:

HTTP/1.1 200 0K Content-Length: 0

3.2.4. 从设备组移除设备

以下命令从设备组#1移除gpu#0。

mx-dcmi命令:

mx-dcmi group --host [IP]:[PORT] -r 0 -g 1

操作成功,显示如下:

remove gpus operation successfully

RESTful API:

curl -i -X DELETE http:// [IP]:[PORT]/api/v1/groups/1/gpu?gpuid=0

操作成功,显示如下:

HTTP/1.1 200 0K Content-Length: 0

3.2.5. 查看设备组列表

mx-dcmi命令:

mx-dcmi group --host [IP]:[PORT] -l

操作成功,显示如下:

============= MetaX Data Center Management Interface Log ============= Timestamp : Fri Mar 8 16:52:49 2024 +--------------------------------------------------------------------+ | GROUPS | | 1 groups found | +--------------------------------------------------------------------+ +------------+-------------------------------------------------------+ | Group ID | 0 | | Group Name | DCM_ALL_SUPPORTED_GPUS | | GPU ID(s) | 0, 1, 2, 3, 4, 5, 6, 7 | +------------+-------------------------------------------------------+

RESTful API:

curl -i -X GET http:// [IP]:[PORT]/api/v1/groups

操作成功,显示如下:

HTTP/1.1 200 OK Content-type: application/json Content-Length: 86 {"gpugroups":[{"groupid":0,"name":"DCM_ALL_SUPPORTED_GPUS","gpus":[0,1,2,3,4,5,6,7]}]}

3.2.6. 查看设备组详细信息

mx-dcmi命令:

mx-dcmi group --host [IP]:[PORT] -i -g 1

操作成功,显示如下:

============= MetaX Data Center Management Interface Log ============= Timestamp : Fri Mar 8 17:11:38 2024 +------------+-----------------------------------------------------+ | GROUPS | +------------+-----------------------------------------------------+ +------------+-----------------------------------------------------+ | Group ID | 1 | | Group Name | group1 | | GPU ID(s) | 0, 1 | +------------+-----------------------------------------------------+

RESTful API:

curl -i -X GET http:// [IP]:[PORT]/api/v1/groups/0

操作成功,显示如下:

HTTP/1.1 200 OK Content-type: application/json Content-Length: 70 {"groupid":0,"name":"DCM_ALL_SUPPORTED_GPUS","gpus":[0,1,2,3,4,5,6,7]}

3.2.7. 删除设备组

mx-dcmi命令:

mx-dcmi group --host [IP]:[PORT] -d 1

操作成功,显示如下:

delete group 1 successfully

RESTful API:

curl -i -X DELETE http:// [IP]:[PORT]/api/v1/groups/1

操作成功,显示如下:

HTTP/1.1 200 0K Content-Length: 0

3.3. 指标分组

用户可以管理自定义的指标组,并将指标组用于设备或设备组的状态监控。

FieldGroup 0是默认创建的指标组,不可更改。

3.3.1. 使用帮助

mx-dcmi fieldgroup -h

执行命令后,显示如下:

USAGE:

mx-dcmi fieldgroup [-hv] {-c <groupName>]|-d|-i|-l|--list-fields} [-f

<fieldsId>][-g <[0,63]>] [--host <IP:PORT>]

Where:

--host <IP:PORT>

The target host to connect.

-g <[0,63]>, --fieldgroup <[0,63]>

The field group to query on the remote host.

-f <fieldsId>, --fields <fieldsId>

Comma-separated list of field ids to add to a field group when

Creating a new one

or Query target fields info when listing available fields

One of:

-c <groupName>, --create <groupName>

Create a field group on the remote host.

-i, --info

Get a field group info on the remote host.

-d, --delete

Delete a field group on the remote host.

-l, --list

List all field group.

--list-fields

List all available field.

MetaX Data Center Management Interface

3.3.2. 创建指标组

以下命令创建指标组create_fieldgroup,并添加指标 1, 2, 3, 4, 25。

mx-dcmi命令:

mx-dcmi fieldgroup --host [IP]:[PORT] -c create_fieldgroup -f 1-4,25

操作成功,显示如下:

create field group "create_fieldgroup" with a group ID of 1 successfully

RESTful API:

curl -i -X POST http://[IP]:[PORT]/api/v1/fieldgroups/create_fieldgroup?fields=1-4,25

操作成功,显示如下:

HTTP/1.1 200 0K Content-type: application/json Content-Length: 13 {"groupid":1}

3.3.3. 删除指标组

mx-dcmi命令:

mx-dcmi fieldgroup --host [IP]:[PORT] -d -g 1

操作成功,显示如下:

delete field group 1 successfully

RESTful API:

curl -i -X DELETE http://[IP]:[PORT]/api/v1/fieldgroups/1

操作成功,显示如下:

HTTP/1.1 200 0K Content-Length: 0

3.3.4. 查看指标组信息

mx-dcmi命令:

mx-dcmi fieldgroup --host [IP]:[PORT] -i -g 1

操作成功,显示如下:

============= MetaX Data Center Management Interface Log ============= Timestamp : Wed Mar 6 15:00:00 2024 +----------------------------------------------------------------+ | FIELD GROUP | +--------------------+-------------------------------------------+ | Field Group ID |-1 | | Field Group Name |-create_fieldgroup | | Field ID(s) |-1, 2, 3, 4, 25 | +--------------------+-------------------------------------------+

RESTful API:

curl -i -X GET http://[IP]:[PORT]/api/v1/fieldgroups/1

操作成功,显示如下:

HTTP/1.1 200 0K Content-type: application/json Content-Length: 62 {"groupid":1,"name":"create_fieldgroup","fields":{1,2,3,4,25}}

3.3.5. 查看指标组列表

mx-dcmi命令:

mx-dcmi fieldgroup --host [IP]:[PORT] -l

操作成功,显示如下:

============= MetaX Data Center Management Interface Log ============= Timestamp : Wed Mar 6 15:00:00 2024 +----------------------------------------------------------------+ | FIELD GROUP | | 2 field groups found | +--------------------+-------------------------------------------+ | Field Group ID |-0 | | Field Group Name |-DEFAULT_FIELD_GROUP | | Field ID(s) |-11, 13, 23, 24, 25, 31, 32, 33 | +--------------------+-------------------------------------------+ | Field Group ID |-1 | | Field Group Name |-create_fieldgroup | | Field ID(s) |-1, 2, 3, 4, 25 | +--------------------+-------------------------------------------+

RESTful API:

curl -i -X GET http://[IP]:[PORT]/api/v1/fieldgroups

操作成功,显示如下:

HTTP/1.1 200 0K Content-type: application/json Content-Length: 150 {"fieldgroups":[{"groupid":0,"name":"DEFAULT_FIELD_GROUP","fields":[11,13,23,24,25,31,32,33]},{"groupid":1,"name":"create_fieldgroup","fields":[1,2,3,4,25]}]}

3.3.6. 查看所有可用指标

mx-dcmi命令:

mx-dcmi fieldgroup --host [IP]:[PORT] --list-fields

操作成功,显示如下:

============= MetaX Data Center Management Interface Log ============= Timestamp : Wed Mar 6 15:00:00 2024 +----+-----------------------+----+-----------------------+ | Id | Field Name | Id | Field Name | +----------------------------+----+-----------------------+ | 0 | UNKNOWN | 18 | CCX_CLOCK | | 1 | DRIVER_VERSION | 19 | GPU_TEMP | | 2 | VBIOS_VERSION | 20 | SOC_TEMP | | 3 | FW_VERSION | 21 | TEMP_LIMIT | | 4 | SML_VERSION | 22 | PMBUS_POWER | | 5 | PROCESS_NAME | 23 | BOARD_POWER | | 6 | DEV_COUNT | 24 | GPU_UTIL | | 7 | DEV_NAME down | 25 | MEM_UTIL | | 8 | DEV_SML_INDEX | 26 | VPUE_UTIL | | 9 | DEV_SERIAL | 27 | VPUD_UTIL | | 10 | DEV_BDFID | 28 | PCIE_GEN | | 11 | GPU_CLOCK | 29 | PCIE_WIDTH | | 12 | CSC_CLOCK | 30 | PCIE_MAX_GEN | | 13 | MC_CLOCK | 31 | PCIE_TX | | 14 | SOC_CLOCK | 32 | PCIE_RX | | 15 | DNOC_CLOCK | 33 | ECC_STATE | | 16 | VPUE_CLOCK | 34 | MAX_FIELDS | | 17 | VPUD_CLOCK | | | +----------------------------+----+-----------------------+

RESTful API:

curl -i -X GET http://[IP]:[PORT]/api/v1/fields

操作成功,显示如下:

HTTP/1.1 200 0K Content-type: application/json Content-Length: 1008 {"fields":[{"id":0,"name":"UNKNOWN"},{"id":1,"name":"DRIVER_VERSION"},{"id":2,"name":"VBIOS_VERSION"},{"id":3,"name":"FW_VERSION"},{"id":4,"name":"SML_VERSION"},{"id":5,"name":"PROCESS_NAME"},{"id":6,"name":"DEV_COUNT"},{"id":7,"name":"DEV_NAME"},{"id":8,"name":"DEV_SML_INDEX"},{"id":9,"name":"DEV_SERIAL"},{"id":10,"name":"DEV_BDFID"},{"id":11,"name":"GPU_CLOCK"},{"id":12,"name":"CSC_CLOCK"},{"id":13,"name":"MC_CLOCK"},{"id":14,"name":"SOC_CLOCK"},{"id":15,"name":"DNOC_CLOCK"},{"id":16,"name":"VPUE_CLOCK"},{"id":17,"name":"VPUD_CLOCK"},{"id":18,"name":"CCX_CLOCK"},{"id":19,"name":"GPU_TEMP"},{"id":20,"name":"SOC_TEMP"},{"id":21,"name":"TEMP_LIMIT"},{"id":22,"name":"PMBUS_POWER"},{"id":23,"name":"BOARD_POWER"},{"id":24,"name":"GPU_UTIL"},{"id":25,"name":"MEM_UTIL"},{"id":26,"name":"VPUE_UTIL"},{"id":27,"name":"VPUD_UTIL"},{"id":28,"name":"PCIE_GEN"},{"id":29,"name":"PCIE_WIDTH"},{"id":30,"name":"PCIE_MAX_GEN"},{"id":31,"name":"PCIE_TX"},{"id":32,"name":"PCIE_RX"},{"id":33,"name":"ECC_STATE"},{"id":34,"name":"MAX_FIELDS"}]}

3.4. 状态监控

用户可以实时监控设备数据。

3.4.1. 使用帮助

mx-dcmi dmon -h

执行命令后,显示如下:

USAGE:

mx-dcmi dmon [-hv] [-g <[0, 63]>|-i <gpu-id>] [-e <fieldIds>|-f <[0,

63]>] [-c <count>] [-d <delay>] [--host <IP:PORT>]

Where:

--host <IP:PORT>

The target host to connect.

-c <count>, --count <count>

Integer representing how many times to loop before exiting, 0

represents forever.

-d <delay>, --delay <delay>

Integer(ms) representing how often to query results from DCMD and

print them.

Default: 1000ms, minimum: 1000ms.

Either of:

-i <gpu-id>, --gpu-id <gpu-id>

Gpu index, run mx-dcmi discovery --host ip:port -l to check list of

gpu ids.

-g <[0, 63]>, --group-id <[0, 63]>

The gpu group to query on the specified host.

Either of:

-e <fieldIds>, --field-id <fieldIds>

Field identifier to view/inject.

-f <[0, 63]>, --field-group-id <[0, 63]>

The field group to query on the specified host.

--, --ignore_rest

Ignores the rest of the labeled arguments following this flag.

-v, --version

Displays version information and exits.

-h, --help

Displays usage information and exits.

MetaX Data Center Management Interface

3.4.2. 开启设备监控

mx-dcmi命令:

mx-dcmi dmon --host [IP]:[PORT] -i 0 -e 11 -d 2000 -c 5

-e(指标)可替换为-f(指标组)。-d(可选参数)指定监控间隔,不指定时默认1s。-c(可选参数)指定监控次数,不指定时持续监控。

操作成功,显示如下:

gpu GPU_CLOCK id MHz 0 428 0 428 0 428 0 428 0 428

RESTful API:

curl -i -X GET http:// [IP]:[PORT]/api/v1/stats?gpu=0\&fields=11\&delay=2000\&count=5

fields(指标)可替换为fieldgroup(指标组)。delay(可选参数)指定监控间隔,不指定时默认1s。count(可选参数)指定监控次数,不指定时持续监控。

操作成功,显示如下:

HTTP/1.1 200 OK Content-Type: text/event-stream {"stats":[{"gpuid":0,"gpustats":[{"fieldid":11,"fieldname":"GPU_CLOCK","fieldunit":"MHz","value":"428"}]}]} {"stats":[{"gpuid":0,"gpustats":[{"fieldid":11,"fieldname":"GPU_CLOCK","fieldunit":"MHz","value":"428"}]}]} {"stats":[{"gpuid":0,"gpustats":[{"fieldid":11,"fieldname":"GPU_CLOCK","fieldunit":"MHz","value":"428"}]}]} {"stats":[{"gpuid":0,"gpustats":[{"fieldid":11,"fieldname":"GPU_CLOCK","fieldunit":"MHz","value":"428"}]}]} {"stats":[{"gpuid":0,"gpustats":[{"fieldid":11,"fieldname":"GPU_CLOCK","fieldunit":"MHz","value":"428"}]}]}

3.4.3. 开启设备组监控

mx-dcmi命令:

mx-dcmi dmon --host [IP]:[PORT] -g 0 -e 11 -d 2000 -c 5

-e(指标)可替换为-f(指标组)。-d(可选参数)指定监控间隔,不指定时默认1s。-c(可选参数)指定监控次数,不指定时持续监控。

操作成功,显示如下:

gpu GPU_CLOCK id MHz 0 428 1 428 0 428 1 428 0 428 1 428 0 428 1 428 0 428 1 428

RESTful API:

curl -i -X GET http:// [IP]:[PORT]/api/v1/stats?gpugroup=0\&fields=11\&delay=2000\&count=5

fields(指标)可替换为fieldgroup(指标组)。delay(可选参数)指定监控间隔,不指定时默认1s。count(可选参数)指定监控次数,不指定时持续监控。

操作成功,显示如下:

HTTP/1.1 200 OK Content-Type: text/event-stream {"stats":[{"gpuid":0,"gpustats":[{"fieldid":11,"fieldname":"GPU_CLOCK","fieldunit":"MHz","value":"428"}]},{"gpuid":1,"gpustats":[{"fieldid":11,"fieldname":"GPU_CLOCK","fieldunit":"MHz","value":"428"}]}]} {"stats":[{"gpuid":0,"gpustats":[{"fieldid":11,"fieldname":"GPU_CLOCK","fieldunit":"MHz","value":"428"}]},{"gpuid":1,"gpustats":[{"fieldid":11,"fieldname":"GPU_CLOCK","fieldunit":"MHz","value":"428"}]}]} {"stats":[{"gpuid":0,"gpustats":[{"fieldid":11,"fieldname":"GPU_CLOCK","fieldunit":"MHz","value":"428"}]},{"gpuid":1,"gpustats":[{"fieldid":11,"fieldname":"GPU_CLOCK","fieldunit":"MHz","value":"428"}]}]} {"stats":[{"gpuid":0,"gpustats":[{"fieldid":11,"fieldname":"GPU_CLOCK","fieldunit":"MHz","value":"428"}]},{"gpuid":1,"gpustats":[{"fieldid":11,"fieldname":"GPU_CLOCK","fieldunit":"MHz","value":"428"}]}]} {"stats":[{"gpuid":0,"gpustats":[{"fieldid":11,"fieldname":"GPU_CLOCK","fieldunit":"MHz","value":"428"}]},{"gpuid":1,"gpustats":[{"fieldid":11,"fieldname":"GPU_CLOCK","fieldunit":"MHz","value":"428"}]}]}

3.4.4. 停止监控

执行ctrl+c停止或者通过 -c/--count 指定监控次数。

3.5. 任务进程数据收集

mx-dcmd支持在后台收集数据、分析数据。这样,用户可以方便地收集任务级别的数据。

后台收集数据流程如图 3.1 所示。

图 3.1 后台收集数据流程

3.5.1. 使用帮助

mx-dcmi stats -h

执行命令后,显示如下:

USAGE:

mx-dcmi stats [-hv] {-j <jobName>]|-l|-r <jobName>|-s <jobName>|-x <jobName>} [-g <[0,63]>] [-m <duration>] [-u <interval>] [--host <IP:PORT>]

Where:

--host <IP:PORT>

The target host to connect.

-g <[0,63]>, --fieldgroup <[0,63]>

The gpu group to query on the job.

-u <interval>, --interval <interval>

How often to update the job stats in ms, default: 2000.

-m <duration>, --duration <duration>

How long to run the job in s, default: run till stop.

One of:

-s <jobName>, --jstart <jobName>

Start recording job statistics.

-x <jobName>, --jstop <jobName>

Stop recording job statistics.

-r <jobName>, --jremove <jobName>

Remove job statistics.

-j <jobName>, --job <jobName>

Display job statistics.

-l, --list

List all jobs.

--, --ignore_rest

Ignores the rest of the labeled arguments following this flag.

-v, --version

Displays version information and exits.

-h, --help

Displays usage information and exits.

MetaX Data Center Management Interface

3.5.2. 启动后台收集任务

以下命令创建并启动test_job,收集group0的信息,更新间隔2000 ms,更新6次后停止。

其中,更新次数:11s/2000ms = 6。

mx-dcmi命令:

mx-dcmi stats --host [IP]:[PORT] -s test_job -g 0 -u 2000 -m 11

操作成功,显示如下:

Successfully started recording stats for test_job

RESTful API:

curl -i -X POST http://[IP]:[PORT]/api/v1/job/test_job?group=0\&interval=2000\&duration=11

操作成功,显示如下:

HTTP/1.1 200 0K Content-Length: 0

3.5.3. 显示所有后台收集任务

mx-dcmi命令:

mx-dcmi stats --host [IP]:[PORT] -l

操作成功,显示如下:

============= MetaX Data Center Management Interface Log ============= Timestamp : Wed Mar 6 15:00:00 2024 +----------------------------------------------------------------+ | Job List | +-------------+----------------+---------------------------------+ | Job Name |-State | Gpus | +-------------+----------------+---------------------------------+ | test_job2 |-running |-0, 1, 2, 3, 4, 5, 6, 7 | | test_job |-stopped |-0, 1, 2, 3, 4, 5, 6, 7 | +-------------+----------------+---------------------------------+

RESTful API:

curl -i -X GET http://[IP]:[PORT]/api/v1/jobs

操作成功,显示如下:

HTTP/1.1 200 0K Content-Length: 143 {"jobs":[{"jobname":"test_job2","state":"running","gpus":[0,1,2,3,4,5,6,7]},{"jobname":"test_job","state":"stopped","gpus":[0,1,2,3,4,5,6,7]}]}

3.5.4. 停止后台收集任务

mx-dcmi命令:

mx-dcmi stats --host [IP]:[PORT] -x test_job

操作成功,显示如下:

Successfully stopped recording stats for test_job

RESTful API:

curl -i -X PUT http://[IP]:[PORT]/api/v1/job/test_job?status=stop

操作成功,显示如下:

HTTP/1.1 200 0K Content-Length: 0

3.5.5. 显示指定后台任务数据

mx-dcmi命令:

mx-dcmi stats --host [IP]:[PORT] -j test_job

操作成功,显示如下:

============= MetaX Data Center Management Interface Log ============= Timestamp : Wed Mar 6 15:00:00 2024 +-------------------------------------------------------------------+ | Summary | +-------------------------------+-----------------------------------+ | ----- Execution Stats ------- +-----------------------------------| | Start Time | Wed Mar 6 15:00:00 | | Update Time |-Wed Mar 6 15:00:12 | | Total Execution Time (sec) | 12 | | No. of Processes Time | 0 | | ----- Power Usage (Watts) ---+-----------------------------------| | GPU0 | Avg: 58.7, Max: 59.3 Min: 58.1 | | GPU1 |-Avg: 60.7, Max: 61.1 Min: 60.5 | | GPU2 | Avg: 56.8, Max: 57.1 Min: 56.5 | | GPU3 | Avg: 55.2, Max: 55.5 Min: 55.0 | | GPU4 | Avg: 62.5, Max: 62.8 Min: 62.3 | | GPU5 | Avg: 53.4, Max: 53.6 Min: 53.1 | | GPU6 | Avg: 55.6, Max: 55.9 Min: 55.1 | | GPU7 | Avg: 57.0, Max: 57.1 Min: 57.0 | | ----- Max Memory Used (KB) --+-----------------------------------| | GPU0 | 936048 | | GPU1 | 936048 | | GPU2 | 936048 | | GPU3 | 936048 | | GPU4 | 936048 | | GPU5 | 936048 | | GPU6 | 936048 | | GPU7 | 936048 | | ----- Event Stats -----------+-----------------------------------| | Single Bit ECC Errors | Not Specified | | Double Bit ECC Errors |-Not Specified | | ----- Overall Health --------+-----------------------------------| | Overall Health | Healthy | +-------------------------------+-----------------------------------+

RESTful API:

curl -i -X GET http://[IP]:[PORT]/api/v1/job/test_job

操作成功,显示如下:

HTTP/1.1 200 0K Content-type: application/json Content-Length: 830 {"starttime":"Wed Mar 6 15:00:00","updatetime":"Wed Mar 6 15:00:12","executetime":12,"processnumber":0,"devicenumber":8,"gpustatistics":[{"gpuid":0,"maxmemoryused":936048,"avgpower":58864,"maxpower":59262,"minpower":58055},{"gpuid":1,"maxmemoryused":936048,"avgpower":58864,"maxpower":59262,"minpower":58055},{"gpuid":2,"maxmemoryused":936048,"avgpower":58864,"maxpower":59262,"minpower":58055},{"gpuid":3,"maxmemoryused":936048,"avgpower":58864,"maxpower":59262,"minpower":58055},{"gpuid":4,"maxmemoryused":936048,"avgpower":58864,"maxpower":59262,"minpower":58055},{"gpuid":5,"maxmemoryused":936048,"avgpower":58864,"maxpower":59262,"minpower":58055},{"gpuid":6,"maxmemoryused":936048,"avgpower":58864,"maxpower":59262,"minpower":58055},{"gpuid":7,"maxmemoryused":936048,"avgpower":57023,"maxpower":57104,"minpower":56960}]}

3.5.6. 删除后台收集任务

mx-dcmi命令:

mx-dcmi stats --host [IP]:[PORT] -r test_job

操作成功,显示如下:

Successfully removed job test_job

RESTful API:

curl -i -X DELETE http://[IP]:[PORT]/api/v1/job/test_job

操作成功,显示如下:

HTTP/1.1 200 0K Content-Length: 0

3.6. 设备健康状态监控

用户可以查看设备健康状态。

3.6.1. 使用帮助

mx-dcmi health -h

执行命令后,显示如下:

USAGE:

mx-dcmi health [-hv] [-c|-d|-e <type>|--clear] [-g <[0, 63]>] [--host

<IP:PORT>]

Where:

--host <IP:PORT>

The target host to connect.

-g <[0, 63]>, --group <[0, 63]>

Group id

Either of:

-c, --check

Check to see if any errors or warnings have occurred in the enabled

monitors.

-d, --disable

Disable all monitors.

-e <type>, --enable <type>

Enable specified monitor(s).

a - all monitors

l - log monitor

e - event monitor

Note: 'le' is valid for enabling log&event monitors

--clear

Clear errors and warnings have detected in the enabled monitors.

Note: driver exception won't be cleared.

--, --ignore_rest

Ignores the rest of the labeled arguments following this flag.

-v, --version

Displays version information and exits.

-h, --help

Displays usage information and exits.

MetaX Data Center Management Interface

3.6.2. 检查设备健康状态

以下命令检查设备组#1在启用的监控项中是否发生告警。

mx-dcmi命令:

mx-dcmi health --host [IP]:[PORT] -c -g 1

操作成功,显示如下:

============= MetaX Data Center Management Interface Log ============= Timestamp : Mon Mar 11 11:59:29 2024 +----------------+-----------------------------------------------+ | Overall Health | Healthy | +----------------+-----------------------------------------------+ | GPU ID: 0 | Healthy | +----------------+-----------------------------------------------+ | GPU ID: 1 | Healthy | +----------------+-----------------------------------------------+

RESTful API:

curl -i -X GET http://[IP]:[PORT]/api/v1/health/gpugroup/1

操作成功,显示如下:

HTTP/1.1 200 OK Content-type: application/json Content-Length: 254 {"grouphealthinfo":"Healthy","driverexception":[],"gpuhealthinfo":[{"gpuid":0,"healthinfo":{"errorlog":[],"fatallog":[],"hwexception":[],"memoryexception":[]}},{"gpuid":1,"healthinfo":{"errorlog":[],"fatallog":[],"hwexception":[],"memoryexception":[]}}]}

3.6.3. 启用监控项

以下命令启用Log和Event监控。

mx-dcmi命令:

mx-dcmi health --host [IP]:[PORT] -e le

操作成功,显示如下:

Successfully enable health monitor, type 3.

RESTful API:

curl -i -X POST http://[IP]:[PORT]/api/v1/health/3

操作成功,显示如下:

HTTP/1.1 200 OK Content-Length: 0

3.6.4. 禁用所有监控项

以下命令禁用所有监控项。

mx-dcmi命令:

mx-dcmi health --host [IP]:[PORT] -d

操作成功,显示如下:

Successfully disable health monitors.

RESTful API:

curl -i -X DELETE http://[IP]:[PORT]/api/v1/health

操作成功,显示如下:

HTTP/1.1 200 OK Content-Length: 0

3.6.5. 清除告警

以下命令清除设备组#1启用监控项中已经检测到的告警。

mx-dcmi命令:

mx-dcmi health --host [IP]:[PORT] --clear -g 1

操作成功,显示如下:

Successfully clear group 1 health monitors.

RESTful API:

curl -i -X DELETE http://[IP]:[PORT]/api/v1/health/gpugroup/1

操作成功,显示如下:

HTTP/1.1 200 OK Content-Length: 0

3.7. 设备自检

用户可以使用自检功能检查设备状况。自检前建议关闭虚拟化。

3.7.1. 使用帮助

mx-dcmi diag -h

执行命令后,显示如下:

USAGE:

mx-dcmi diag [-hjv] [-c <PATH>] [-g <[0, 63]>] [-r <[1|2|3|4|software|pcie|mxlk|ops|mem>] [--host <IP:PORT>]

Where:

--host <IP:PORT>

The target host to connect.

-g <[0, 63]>, --group <[0, 63]>

The group ID to query.

-c <PATH>, --configfile <PATH>

Path to the configuration file in json format.

-j, --json

Print detailed result in json format.

--generate-template

Generate diag config template file.

-r <1|2|3|4|software|pcie|mxlk|ops|mem>, --run <1|2|3|4|software|pcie|mxlk|ops|mem>

Run a diagnostic.

(Note: higher numbered tests include all beneath.)

1 - Quick (System Validation ~ seconds)

2 - Medium (Extended System Validation ~ 2 minutes)

3 - Long (System HW Diagnostics ~ 15 minutes)

4 - Extended (Longer-running System HW Diagnostics)

Specific module diagnostic to run by name

Module name: software, pcie, mxlk, ops, mem

-p <MXC500>, --product <MXC500>

Specify metax product name to generate diag config file.

Supported product: MXC500, MXC550

--, --ignore_rest

Ignores the rest of the labeled arguments following this flag.

-v, --version

Displays version information and exits.

-h, --help

Displays usage information and exits.

MetaX Data Center Management Interface

3.7.2. 自检项目

3.7.2.1. 自检项目说明

项目类别 |

所属级别 |

所属类型 |

说明 |

|---|---|---|---|

MxSml check |

Level 1 |

Deployment |

检查libmxsml.so是否可用。 |

Maca check |

Level 1 |

Deployment |

检查MXMACA软件栈是否可用。 |

Permission Check |

Level 1 |

Deployment |

检查用户权限。 |

Compiler Check |

Level 1 |

Deployment |

检查编译器是否可用。 |

Nouveau Check |

Level 1 |

Deployment |

检查Nouveau驱动是否禁用。 |

Environment Variable Check |

Level 1 |

Deployment |

检查环境变量设置。 |

Power Mode Check |

Level 1 |

Deployment |

检查是否开启高功耗模式,可通过 |

PCIe |

Level 2 |

Integration |

检查PCIe speed(GT/s),lanes和最大带宽(MB/s)。 |

MetaXLink |

Level 2 |

Integration |

检查MetaXLink speed(GT/s),lanes和最大带宽(MB/s)。 |

OPS |

Level 2 |

Hardware |

精度为INT8、BF16、FP16、FP32_vector、FP32_matrix、TF32的算力指标测试。自检配置文件对应 |

Memory |

Level 2 |

Hardware |

HBM申请大内存测试,及HBM带宽测试。 |

Gpu Burn |

Level 3 |

Hardware |

压力测试,检查功耗,使用率。自检配置文件及自检结果对应 |

Pulse Test |

Level 4 |

Hardware |

功耗波动测试。 |

Memory Test |

Level 4 |

Hardware |

共有11种类型的Memory测试。 |

3.7.2.2. 自检项目诊断时间

自检级别 |

诊断时间(参考) |

|---|---|

Level 1 |

< 2 秒 |

Level 2 |

< 2 分钟 |

Level 3 |

< 30 分钟 |

Level 4 |

< 2 小时 |

3.7.2.3. 基准配置文件

执行Level 2 ~ Level 4自检前,需要用户提供包含检查项目基准数据的json文件(Level 1自检无须基准配置文件)。如果相关检查项目没有设置基准数据,则会跳过该项检查。如果检查结果≥基准数据,则该项检查通过;否则失败。

执行以下命令可生成默认指标配置文件diag-config-MXC500.json。

mx-dcmi命令:

mx-dcmi diag –-generate-template --host [IP]:[PORT] --product <MXC500|MXC550>

其中 --product 为产品名称,目前支持MXC500及MXC550,若不填则默认生成MXC500配置文件。

以下为diag-config-MXC500.json的内容,用户可根据实际期望进行调整。

{

"pcie": {

"speed": 32,

"width": 16,

"bw_h2d": 20000

"bw_d2h": 20000

"bw_h2d_d2h": 50000

"bw_uni_p2p": 30000

"bw_bi_p2p": 50000

},

"metaxlink": {

"bw_uni_p2p": 49000,

"bw_bi_p2p": 90000,

"speed": 32,

"width": 16

},

"ops": {

"int8": 472,

"bf16": 238,

"fp16": 238,

"fp32_matrix": 29,

"tf32": 119,

"fp32_vector": 14

},

"memory": {

"max_alloc_siza": 63000,

"bandwidth": 1400000

},

"stress": {

"power(W)": 340,

"usage(%)": 95,

"duration(s)": 20

},

"pulse": {

" duration(s)": 360

},

"memory": {

"test_indexes": [0,1,2,3,4,5,6,7,8,9,10]

}

}

3.7.3. 自检示例

3.7.3.1. Level 1自检

mx-dcmi命令:

mx-dcmi diag --host [IP]:[PORT] -r 1

操作成功,显示如下:

============= MetaX Data Center Management Interface Log =============

Timestamp : Mon Mar 11 14:36:07 2024

+-----------------------------+--------------------------------------+

| Diagnostic | Result |

+-----------------------------+--------------------------------------+

| ------ Deployment ----------+--------------------------------------|

| MxSml-Library | Pass |

| Maca Library | Pass |

| Permission Check | Pass |

| Compiler Check | Pass |

| Nouveau Check | Pass |

| Environment Variable Check | Pass |

| Power Mode Check | Pass |

+-----------------------------+--------------------------------------+

3.7.3.2. RESTful API方式自检

获取MetaXLink自检结果,其中 -d 的入参为期望的MetaXLink指标结果。MetaXLink测试属于Level 2检测,对于其他Level 2检测项不予检测,显示为 skip。

mx-dcmi命令:

curl -i -X POST -d '{"metaxlink": {"bw_uni_p2p": 92000,"bw_bi_p2p": 181000,"speed": 32,"width": 16}}' http://[IP]:[PORT]/api/v1/diag/mxlk

操作成功,显示如下:

HTTP/1.1 200 OK

Content-type: application/json

Content-Length: 1126

{

"software": {

"mxsml": "pass",

"maca": "pass",

"permission": "pass",

"compiler": "pass",

"nouveau": "pass",

"envvar": "pass",

"powermode": "pass"

},

"pcie": {

"result": "skip"

},

"metaxlink": {

"result": "pass",

"p2p_uni": [

{

"src": 0,

"dst": 1,

"bandwidth": 101677

},

{

"src": 1,

"dst": 0,

"bandwidth": 98684

}

],

"p2p_bi": [

{

"src": 0,

"dst": 1,

"bandwidth": 191145

}

],

"mxlkspeedwidth": [

{

"gpuid": 0,

"speed": 32.0,

"width": 16

},

{

"gpuid ": 1,

"speed": 32.0,

"width": 16

}

]

},

"perf": {

"result": "skip"

},

"memory": {

"memAllocResult": "skip",

"memBwResult": "skip"

}

}

3.7.3.3. 指定模块自检

用户可根据需要指定模块进行自检,以HBM为例。

mx-dcmi命令:

mx-dcmi diag --host [IP]:[PORT] -r mem -c mxdcm-diag.json

操作成功,显示如下:

============= MetaX Data Center Management Interface Log =============

Timestamp : Mon Mar 11 14:36:07 2024

+-------------------------+------------------------------------+

| Diagnostic | Result |

+-------------------------+------------------------------------+

| ------ Deployment ------+------------------------------------|

| MxSml Library | Pass |

| Maca Library | Pass |

| Permission Check | Pass |

| Compiler Check | Pass |

| Nouveau Check | Pass |

+-------------------------+------------------------------------+

| ------ Hardware --------+------------------------------------|

| Memory MAX | Pass |

| Memory Bandwidth | Pass |

+-------------------------+------------------------------------+

3.7.3.4. 显示json格式的自检结果

以下命令指定Level 1自检,以json格式显示结果。

mx-dcmi命令:

mx-dcmi diag --host [IP]:[PORT] -r 1 -j

操作成功,显示如下:

============= MetaX Data Center Management Interface Log =============

Timestamp : Mon Mar 11 14:36:26 2024

{

"software": {

"mxsml": "pass",

"maca": "pass",

"permission": "pass",

"compiler": "pass",

"nouveau": "pass"

}

}

3.8. 拓扑查询

用户可以使用拓扑查询功能查看GPU设备的拓扑连接。

3.8.1. 使用帮助

mx-dcmi topo -h

执行命令后,显示如下:

USAGE:

mx-dcmi topo [-hv] {-g |--gpuid } [--host ]

Where:

--host

The target host to connect.

One of:

--gpuid

The gpu ID to query.

-g , --group

The group ID to query.

--, --ignore_rest

Ignores the rest of the labeled arguments following this flag.

-v, --version

Displays version information and exits.

-h, --help

Displays usage information and exits.

MetaX Data Center Management Interface

3.8.2. 查看设备拓扑信息

mx-dcmi命令:

mx-dcmi topo --host [IP]:[PORT] {-g |--gpuid }

操作成功,显示如下:

============= MetaX Data Center Management Interface Log =============

Timestamp : Sat Apr 19 22:04:47 2025

+-------------------+-----------------------------------------------+

| GPU ID: 0 | Topology Information |

+-------------------+-----------------------------------------------+

| CPU Core Affinity | 0-39,80-119 |

+-------------------+-----------------------------------------------+

| To GPU 1 | Connected via a metaxlink |

+-------------------+-----------------------------------------------+

| To GPU 2 | Connected via a metaxlink |

+-------------------+-----------------------------------------------+

| To GPU 3 | Connected via a metaxlink |

+-------------------+-----------------------------------------------+

| To GPU 4 | Connected via a CPU-level link (the same numa)|

+-------------------+-----------------------------------------------+

| To GPU 5 | Connected via a CPU-level link (the same numa)|

+-------------------+-----------------------------------------------+

| To GPU 6 | Connected via a CPU-level link (the same numa)|

+-------------------+-----------------------------------------------+

| To GPU 7 | Connected via a CPU-level link (the same numa)|

+-------------------+-----------------------------------------------+

End of Log

3.9. 网卡诊断

用户可以使用自检功能检查单机网卡状况,也可对网卡进行单机和跨机的性能测试与压力测试。

3.9.1. 使用帮助

mx-dcmi nic -h

执行命令后,显示如下:

USAGE:

mx-dcmi nic [-hjlv] [--generate-template] [-c <PATH>] [-r <1|2|3>]

[--client-gpu-list <clientgpulist>] [--client-nic-list

<clientniclist>] [--gpu-id <gpuid>] [--host <IP:PORT>]

[--nic-id <nicid>] [--operation <[0, 2]>] [--server-gpu-id

<servergpuid>] [--server-nic-id <servernicid>]

[--server-num <servernum>] [--socket-address

<socketaddress>] [--test-mode <[0, 3]>] [--type <[0, 1]>]

Where:

-l, --list

List all Nic info on the host.

--host <IP:PORT>

The target host to connect.

--test-mode <[0, 3]>

Query diagnostic test-mode:

0 - do diagnostic on the target Nic

1 - do burn diagnostic on the target Nic

2 - start the target Nic as burn diagnostic server

3 - start the target Nic as burn diagnostic client

--nic-id <nicid>

Target Nic Id

--gpu-id <gpuid>

Target Gpu Id

--server-nic-id <servernicid>

Server Nic Id

--server-num <servernum>

Server Count

--server-gpu-id <servergpuid>

Server Gpu Id

--client-nic-list <clientniclist>

Client Nic List

--client-gpu-list <clientgpulist>

Client Gpu List

--socket-address <socketaddress>

Socket Address

--operation <[0, 2]>

Query diagnostic operation:

0 - Send (ib_send_*)

1 - Read (ib_read_*)

2 - Write (ib_write_*)

--type <[0, 1]>

Query diagnostic type:

0 - bw (ib_*_bw)

1 - lat (ib_*_lat)

-c <PATH>, --configfile <PATH>

Path to the configuration file in json format.

-j, --json

Print detailed result in json format.

--generate-template

Generate Nic diag config template file.

-r <1|2|3>, --run <1|2|3>

Run a Nic diagnostic.

(Note: higher numbered tests include all beneath.)

1 - Quick (Nic basic diagnostic ~ seconds)

2 - Medium (Nic diagnostic include ib write perftest ~ 15 minutes)

3 - Long (Nic diagnostic include ib write burntest ~ 30 minutes)

--, --ignore_rest

Ignores the rest of the labeled arguments following this flag.

-v, --version

Displays version information and exits.

-h, --help

Displays usage information and exits.

MetaX Data Center Management Interface

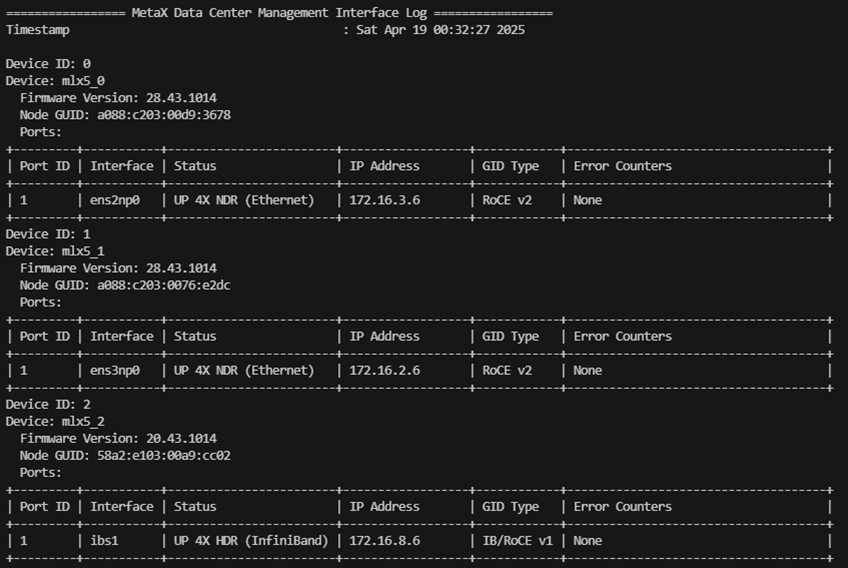

3.9.2. 查询网卡信息

mx-dcmi命令:

mx-dcmi nic --host [IP]:[PORT] -l [–-nic-id <nicid>]

如需显示指定网卡信息,使用 –-nic-id <nicid> 指定。

操作成功,显示如下:

图 3.2 查询网卡信息

3.9.3. 自检项目

3.9.3.1. 自检项目说明

项目类别 |

所属级别 |

说明 |

|---|---|---|

Driver Version Check |

Level 1 |

检测网卡驱动版本 |

Firmware Version Check |

Level 1 |

检测网卡固件版本 |

Kernel Syslog Check |

Level 1 |

检查网卡在内核中的日志 |

Device Name Check |

Level 1 |

检测网卡的名称 |

Port State Check |

Level 1 |

监测网卡端口状态(UP/DOWN)IP地址 |

Error counters Check |

Level 1 |

检测网卡的错误计数器 |

Interface Name Check |

Level 1 |

检测网卡接口名称 |

Perf test byself (write) for all nic |

Level 2 |

对本服务器所有网卡进行性能测试(RDMA写入) |

Perf burn test (write) for all nic |

Level 3 |

对本服务器所有网卡进行压力测试(RDMA写入) |

高级别的检测包含所有低级别的检测内容。若低级别的测试结果中有失败,则不会执行高级别的测试,显示为 Skip。

例如,执行Level 3的测试时,Level 2的测试结果为失败,则默认不执行Level 3的测试内容,Level 3的测试结果为 Skip。

3.9.3.2. 基准配置文件

执行Level 1自检前,可用以下命令生成环境对应的配置文件nic-diag-config.json。生成的配置文件中网卡的信息仅包含与设备相连接的网卡,其他网卡默认不在其中。

参见 3.9.2 查询网卡信息 获取对应网卡的 ip_address 信息。

若相关检查项目内容为空,则会跳过该项检查;若检查结果等同于配置文件中的内容,则该项检查通过,否则失败。

mx-dcmi命令:

mx-dcmi nic --generate-template --host [IP]:[PORT]

以下 nic-diag-config.json 仅为示例,用户可根据实际需要进行调整。

{

"Driver Version": "xxx",

"Firmware Version": "xxx",

"Nic Status": {

"port state": "UP 4X NDR (InfiniBand)",

"device names": [

"mlx5_0",

"mlx5_1"

],

"interface names": [

"ens2np0",

"enp3np0"

],

"ip address": ["172.16.2.1", "172.16.3.1"]

}

}

3.9.4. 自检示例

3.9.4.1. Level 1自检

mx-dcmi命令:

mx-dcmi nic --host [IP]:[PORT] -r 1 -c <config_file>

操作成功,显示如下:

============= MetaX Data Center Management Interface Log =============

Timestamp : Thu Apr 24 09:22:29 2025

+------------------------------+------------------------------------+

| Diagnostic | Result |

+------------------------------+------------------------------------+

| ------ NIC Self Check -------+------------------------------------|

| Driver Version Check | Pass |

| Firmware Version Check | Pass |

| Kernel Syslog Check | Pass |

| Device Names Check | Pass |

| Port State Check | Pass |

| Error Counters Check | Pass |

| Interface Names Check | Pass |

| Ip Address Check | Pass |

+------------------------------+------------------------------------+

End of Log

3.9.4.2. 显示json格式的自检结果

以下命令指定Level 2自检,以json格式显示结果。

mx-dcmi命令:

mx-dcmi nic --host [IP]:[PORT] -c nic-diag-config.json -r 2 -j

操作成功,显示如下:

{"Driver version":{"Result":"pass"},"Firmware version":{"Result":"pass"},"Kernel syslog":{"Result":"pass"},"Device names":{"Result":"pass"},"Port state":{"Result":"pass"},"Interface names":{"Result":"pass"},"Ip address":{"Result":"pass"},"Error counters":{"Result":"pass"},"Perftest":{"Result":"fail","Failed Target":["mlx5_0","mlx5_1"]}}

3.9.5. 单机RDMA性能测试

3.9.5.1. 基本命令格式

用户可根据自己的需求自由组合测试,且可同GPUDirect结合使用,以评估网络和GPU的性能。

备注

当测试结果不理想时,会给出具体原因分析及建议。

mx-dcmi命令:

mx-dcmi nic --host [IP]:[PORT] --test-mode 0 --nic-id <nicid> [--operation <0|1|2>] [--type <0|1>] [--gpu-id <gpuid>]

参数 |

说明 |

|---|---|

|

被测服务器IP地址及mxdcmd服务端口 |

|

指定测试模式, |

|

网卡ID |

|

指定RDMA测试类型。 |

|

指定测试类型。 |

|

指定目标设备ID进行GPU Direct测试 |

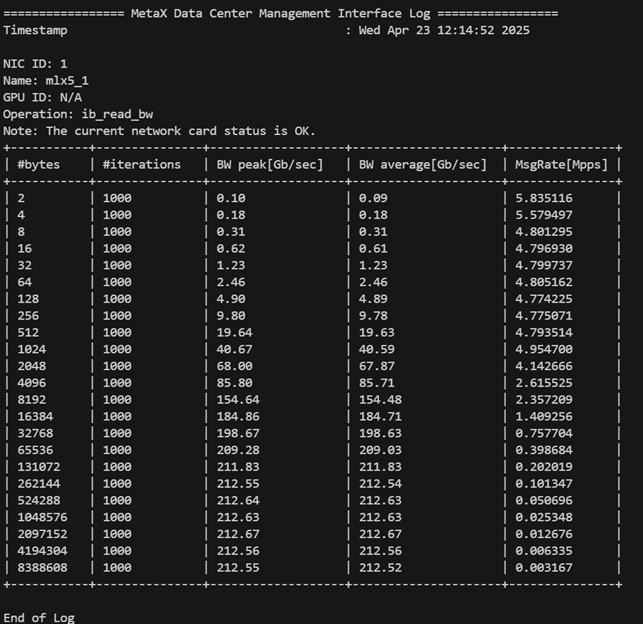

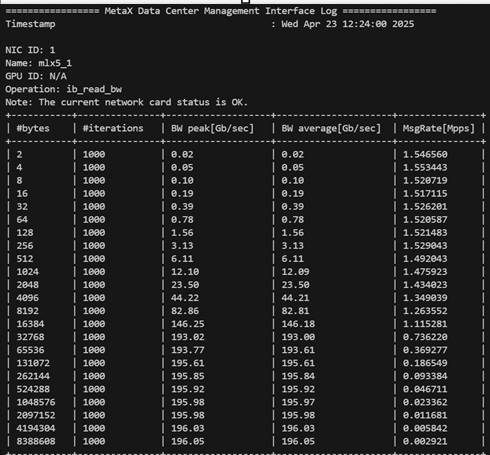

3.9.5.2. 示例1:读取数据带宽测试

mx-dcmi命令:

mx-dcmi nic --host [IP]:[PORT] --test-mode 0 --nic-id 1 --operation 1 --type 0

操作成功,显示如下:

图 3.3 读取数据带宽测试

3.9.5.3. 示例2:写入数据带宽测试(RESTful API)

mx-dcmi命令:

curl -i -X POST http://[IP]:[PORT]/api/v1/nic/ibs/ibtest?testmode=r\&server=3\&operation=write\&type=bw

操作成功,显示如下:

HTTP/1.1 200 OK

Content-type: application/json

Content-type: application/json

Content-Length: 3372

{"results":[{"deviceId":3,"name":"mlx5_2","operation":"ib_write_bw","type":0,"result":[

{"Bytes":2,"Iterations":5000,"BW Peak":0.06857100129127502,"BW Average":0.0682080015540123,"Msg Rate":4.262992858886719,"analysisResult":0},

{"Bytes":4,"Iterations":5000,"BW Peak":0.12999999523162842,"BW Average":0.12999999523162842,"Msg Rate":3.9534409046173096,"analysisResult":0},

{"Bytes":8,"Iterations":5000,"BW Peak":0.23999999463558197,"BW Average":0.23999999463558197,"Msg Rate":3.7158620357513428,"analysisResult":0},

{"Bytes":16,"Iterations":5000,"BW Peak":0.46000000834465027,"BW Average":0.46000000834465027,"Msg Rate":3.588594913482666,"analysisResult":0},

{"Bytes":32,"Iterations":5000,"BW Peak":0.9100000262260437,"BW Average":0.9100000262260437,"Msg Rate":3.5382909774780273,"analysisResult":0},

{"Bytes":64,"Iterations":5000,"BW Peak":1.6100000143051147,"BW Average":1.6100000143051147,"Msg Rate":3.1451549530029297,"analysisResult":0},

{"Bytes":128,"Iterations":5000,"BW Peak":3.4000000953674316,"BW Average":3.4000000953674316,"Msg Rate":3.3210930824279785,"analysisResult":0},

{"Bytes":256,"Iterations":5000,"BW Peak":6.440000057220459,"BW Average":6.440000057220459,"Msg Rate":3.1433870792388916,"analysisResult":0},

{"Bytes":512,"Iterations":5000,"BW Peak":12.899999618530273,"BW Average":12.850000381469727,"Msg Rate":3.1373159885406494,"analysisResult":0},

{"Bytes":1024,"Iterations":5000,"BW Peak":26.030000686645508,"BW Average":26.020000457763672,"Msg Rate":3.1757090091705322,"analysisResult":0},

{"Bytes":2048,"Iterations":5000,"BW Peak":63.47999954223633,"BW Average":63.380001068115234,"Msg Rate":3.868633985519409,"analysisResult":0},

{"Bytes":4096,"Iterations":5000,"BW Peak":118.8499984741211,"BW Average":118.29000091552734,"Msg Rate":3.6099469661712646,"analysisResult":0},

{"Bytes":8192,"Iterations":5000,"BW Peak":192.2100067138672,"BW Average":191.22999572753906,"Msg Rate":2.917980909347534,"analysisResult":0},

{"Bytes":16384,"Iterations":5000,"BW Peak":207.5800018310547,"BW Average":207.5,"Msg Rate":1.5830650329589844,"analysisResult":0},

{"Bytes":32768,"Iterations":5000,"BW Peak":210.83999633789063,"BW Average":210.1999969482422,"Msg Rate":0.8018640279769897,"analysisResult":0},

{"Bytes":65536,"Iterations":5000,"BW Peak":211.94000244140625,"BW Average":211.94000244140625,"Msg Rate":0.40423500537872314,"analysisResult":0},

{"Bytes":131072,"Iterations":5000,"BW Peak":212.13999938964844,"BW Average":212.1199951171875,"Msg Rate":0.20229299366474152,"analysisResult":0},

{"Bytes":262144,"Iterations":5000,"BW Peak":212.22999572753906,"BW Average":212.22999572753906,"Msg Rate":0.10119900107383728,"analysisResult":0},

{"Bytes":524288,"Iterations":5000,"BW Peak":212.17999267578125,"BW Average":212.13999938964844,"Msg Rate":0.050579000264406204,"analysisResult":0},

{"Bytes":1048576,"Iterations":5000,"BW Peak":212.22000122070313,"BW Average":212.1199951171875,"Msg Rate":0.025287000462412834,"analysisResult":0},

{"Bytes":2097152,"Iterations":5000,"BW Peak":212.17999267578125,"BW Average":212.0500030517578,"Msg Rate":0.012639000080525875,"analysisResult":0},

{"Bytes":4194304,"Iterations":5000,"BW Peak":212.17999267578125,"BW Average":212.0500030517578,"Msg Rate":0.006320000160485506,"analysisResult":0},

{"Bytes":8388608,"Iterations":5000,"BW Peak":212.1199951171875,"BW Average":212.0800018310547,"Msg Rate":0.003160000080242753,"analysisResult":0}]}]}

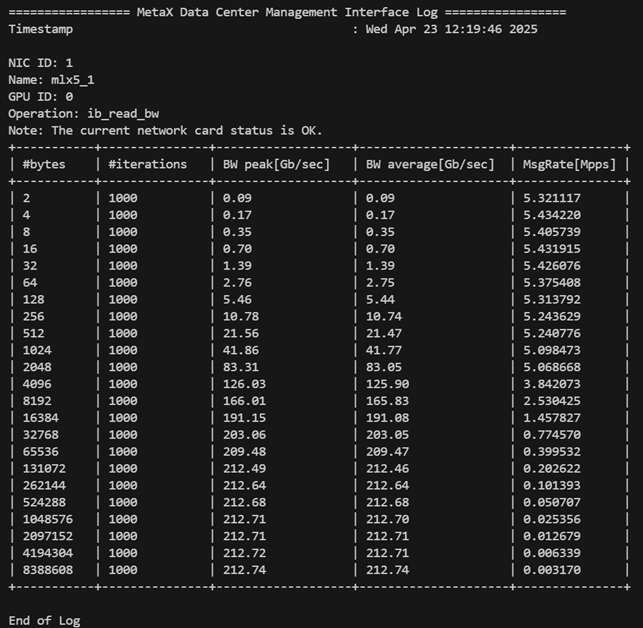

3.9.5.4. 示例3:读取数据延时测试(GPUDirect)

mx-dcmi命令:

mx-dcmi nic --host [IP]:[PORT] --test-mode 0 --nic-id 1 --operation 0 --type 1 --gpu-id 0

操作成功,显示如下:

图 3.4 读取数据延时测试

3.9.6. 单机RDMA压力测试

3.9.6.1. 基本命令格式

用户可根据自己的需求自由组合测试单机和跨机环境下的RDMA 压力测试,包括GPUDirect支持的场景。

备注

当测试结果不理想时,会给出具体原因分析及建议。

mx-dcmi命令:

mx-dcmi nic --host [IP]:[PORT] –-test-mode 1 --server-nic-id <servernicid> --client-nic-list <clientniclist> [--operation <0|1|2>] [--type <0|1>] [--server-gpu-id <servergpuid>] [--client-gpu-list <clientgpulist>]

参数 |

说明 |

|---|---|

|

被测服务器IP地址及mxdcmd服务端口 |

|

指定测试模式, |

|

指定测试的服务端网卡ID,注意需要指定与GPU相连的网卡ID,若为管理口及存储口,则不能测试 |

|

指定测试的客户端网卡ID,注意需要指定与GPU相连的网卡ID,若为管理口及存储口,则不能测试。当有多个网卡需要测试时,需用 “,” 分隔,如:1,2,3 |

|

指定测试的服务端设备ID进行GPUDirect测试 |

|

指定测试的客户端设备ID进行GPUDirect测试,个数需与 |

|

指定RDMA测试类型。 |

|

指定测试类型。 |

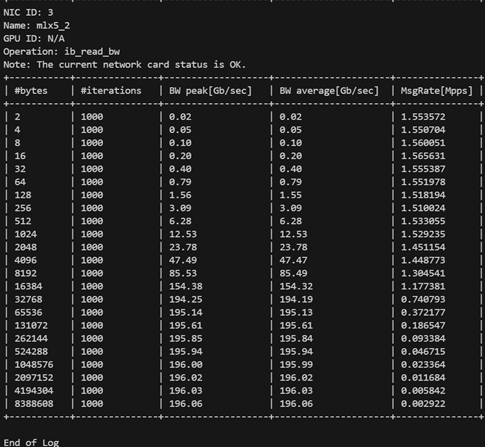

3.9.6.2. 示例1:读取数据带宽压力测试

mx-dcmi nic --host [IP]:[PORT] --test-mode 1 --server-nic-id 0 --client-nic-list 1,3 --operation 1

操作成功,网卡1测试结果显示如下:

图 3.5 读取数据带宽压力测试网卡1结果

网卡3测试结果显示如下:

图 3.6 读取数据带宽压力测试网卡3结果

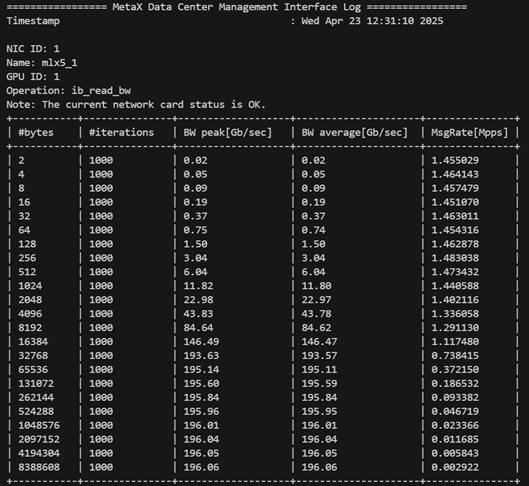

3.9.6.3. 示例2:读取数据带宽压力测试(GPUDirect)

mx-dcmi nic --host [IP]:[PORT] --test-mode 1 --server-nic-id 0 --client-nic-list 1,3 --server-gpu-id 0 --client-gpu-list 1,2 --operation 1

操作成功,网卡1测试结果显示如下:

图 3.7 GPUDirect读取数据带宽压力测试网卡1结果

网卡3测试结果显示如下:

图 3.8 GPUDirect读取数据带宽压力测试网卡3结果

3.9.7. 跨机RDMA压力测试

3.9.7.1. 基本命令格式

用户可根据自己的需求自由组合测试单机和跨机环境下的RDMA 压力测试,包括GPUDirect支持的场景。

备注

当测试结果不理想时,会给出具体原因分析及建议。

服务端:

mx-dcmi nic --host [IP1]:[PORT1] –-test-mode 2 --server-nic-id <servernicid> --server-num <servernum> [--operation <0|1|2>] [--type <0|1>] [--server-gpu-id <servergpuid>]

参数 |

说明 |

|---|---|

|

被测服务器IP地址及mxdcmd服务端口 |

|

指定测试模式, |

|

指定测试的服务端网卡ID,注意需要指定与GPU相连的网卡ID,若为管理口及存储口,则不能测试 |

|

指定测试的服务端端口个数,个数需与对应的list中ID个数相同,当大于1时,仅返回第一个端口。该端口在测试结束后会释放,如要继续测试,则需重启动Server及Client |

|

指定测试的服务端设备ID进行GPUDirect测试 |

|

指定RDMA测试类型。 |

|

指定测试类型。 |

客户端:

mx-dcmi nic --host [IP2]:[PORT2] –-test-mode 3 --client-nic-list <clientniclist> --socket-address [IP1]:[PORT3] [--operation <0|1|2>] [--type <0|1>] [--client-gpu-list <clientgpulist>]

参数 |

说明 |

|---|---|

|

被测服务器IP地址及mxdcmd服务端口 |

|

指定测试模式, |

|

指定测试的客户端网卡ID,注意需要指定与GPU相连的网卡ID,若为管理口及存储口,则不能测试。当有多个网卡需要测试时,需用 “,” 分隔,如:1,2,3 |

|

指定测试要连接的服务端IP和端口号,指定时为Server端返回的IP及端口,若IP为内部IP地址,则需改为Server所在的HOST IP |

|

指定测试的客户端设备ID进行GPUDirect测,个数需与 |

|

指定RDMA测试类型。0为发送(send)操作, |

|

指定测试类型。 |

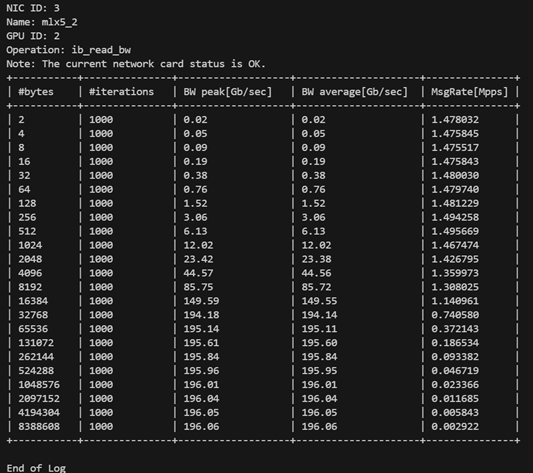

3.9.7.2. 示例1:跨机写入数据带宽压力测试

服务端:

mx-dcmi nic --host [IP1]:[PORT1] --test-mode 2 --server-nic-id 6 --server-num 2

命令返回socket address 用于客户端使用。

操作成功,显示如下:

============= MetaX Data Center Management Interface Log =============

Timestamp : Wed Apr 23 12:50:54 2025

Socket Address: 172.17.26.36:23515

客户端:

mx-dcmi nic --host [IP2]:[PORT2] --test-mode 3 --client-nic-list 3,4 --socket-address [IP1]:23515

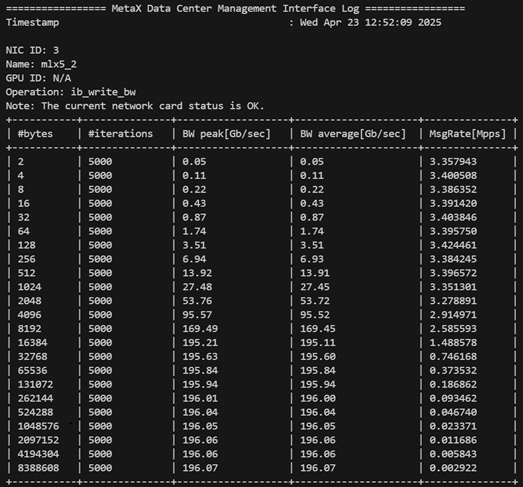

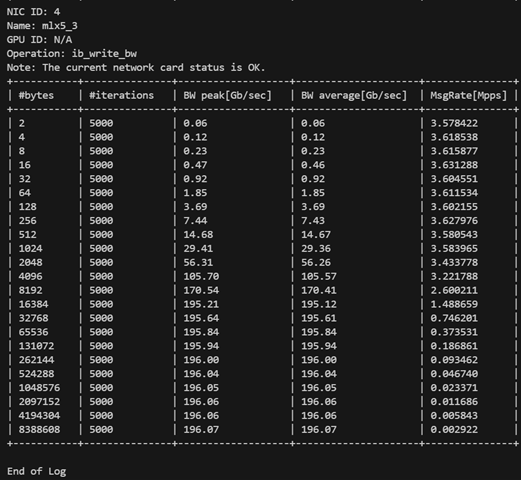

操作成功,网卡3测试结果显示如下:

图 3.9 跨机写入数据带宽压力测试网卡3结果

网卡4测试结果显示如下:

图 3.10 跨机写入数据带宽压力测试网卡4结果

3.9.7.3. 示例2:跨机写入数据带宽压力测试(GPUDirect)

服务端:

mx-dcmi nic --host [IP1]:[PORT1] --test-mode 2 --server-nic-id 4 --server-gpu-id 3 --server-num 2

命令返回socket address 用于客户端使用。

操作成功,显示如下:

============= MetaX Data Center Management Interface Log =============

Timestamp : Wed Apr 23 12:39:27 2025

Socket Address: 172.17.22.36:21515

客户端:

mx-dcmi nic --host [IP2]:[PORT2] --test-mode 3 --client-gpu-list 1,3 --client-nic-list 0,3 --socket-address [IP1]:21515

操作成功,网卡1测试结果显示如下:

图 3.11 GPUDirect跨机写入数据带宽压力测试网卡1结果

网卡3测试结果显示如下:

图 3.12 GPUDirect跨机写入数据带宽压力测试网卡3结果

3.10. MCCL诊断

用户可使用 mccl 子命令对网卡执行MCCL测试。主要功能为生成测试配置模板、执行MCCL压力测试、查询测试结果以及测试任务管理。

前提条件:

集群环境中,被测服务器已部署多个GPU设备及Infiniiband网卡,且配置一致。

mx-dcmd 可部署于参与MCCL测试的主机,或集群中与测试主机配置一致的其他主机;无需在集群的每台被测服务器上部署mx-dcmd。

mx-dcmi 正常运行在管理节点、不参加测试的服务器、参加测试的服务器均可。

服务器之间已设置免密登录。

3.10.1. 使用帮助

mx-dcmi mccl -h

执行命令后,显示如下:

USAGE:

mx-dcmi mccl [-hv] {-g |-j |-l|-r |-s |

-x } [-c ] [--host ]

Where:

--host

The target host to connect.

-c , --configfile

Path to the configuration file in json format. Must be used in

conjunction with -s/--start.

One of:

-s , --start

Start Mccl stress job with the configuration file.

-x , --stop

Stop Mccl stress job.

-r , --remove

Remove Mccl stress job.

-j , --job

Display Mccl stress result.

-g , --generate-template

Generate Mccl diag config template file with wanted ip list.

-l, --list

List all Mccl stress job info on the host.

--, --ignore_rest

Ignores the rest of the labeled arguments following this flag.

-v, --version

Displays version information and exits.

-h, --help

Displays usage information and exits.

MetaX Data Center Management Interface

3.10.2. 配置文件

执行MCCL前需要用户提供MCCL测试所需参数配置。

执行以下命令可生成默认指标配置文件 mccl-diag-config.json。

mx-dcmi命令:

mx-dcmi mccl --host [IP]:[PORT] –g "<PATH>"

[IP]:[PORT]为收集MCCL日志的服务器,已启动mx-dcmd且正常运行。<PATH>指定被测主机列表全路径,如"/home/user/ip.txt",当为""时,将根据[IP]:[PORT]信息自动生成一份配置文件。

以下 mccl-diag-config.json 仅为示例,用户可根据实际需要进行调整。

{

"perf_type": "all_gather_perf",

"mgmt_interface_name": "bond0",

"mgmt_ip_mask": "10.200.146.192/26",

"mgmt_ip_address": "10.200.146.235,10.200.146.226",

"node": "3",

"nic_dev": "mlx5_4, mlx5_1, mlx5_6, mlx5_3, mlx5_2, mlx5_0, mlx5_7, mlx5_5",

"duration": "43200"

}

项目类别 |

说明 |

|---|---|

|

测试类型,目前支持 |

|

服务器管理口名称 |

|

服务器管理口掩码,当跨地址段时,需手动修改该参数 |

|

被测服务器地址列表,可直接手动修改配置文件,生成配置文件传入的IP列表会全部显示在这里 |

|

每组参与测试的服务器个数,若需跑单机,可填 |

|

与GPU设备相连接的网卡列表,可根据实际情况手动修改 |

|

单位:秒,测试持续时间,默认为43200秒(24小时) |

3.10.3. MCCL测试基本命令

本章节以测试任务 mccl_test 为例。

3.10.3.1. 启动MCCL测试

mx-dcmi命令:

mx-dcmi mccl --host [IP]:[PORT] --start mccl_test --configfile <config_file>

--host为收集MCCL日志的服务器,已启动mx-dcmd且正常运行。--start传入任务名称(jobname)。--configfile传入可用于测试的配置文件。

备注

最多可创建64个任务。

操作成功,显示如下:

Successfully started Mccl diag for mccl_test

3.10.3.2. 查询测试任务状态

待MCCL测试开始3分钟后可查询测试任务状态。

mx-dcmi命令:

mx-dcmi mccl --host [IP]:[PORT] -l

操作成功,显示如下:

============= MetaX Data Center Management Interface Log =============

Timestamp : Mon Apr 21 16:52:49 2025

Mccl stress job list

+--------------------+--------------------------+

| Job Name | State |

+-----------------------------------------------+

| mccl_test | running |

+--------------------+--------------------------+

3.10.3.3. 查看测试结果

mx-dcmi命令:

mx-dcmi mccl --host [IP]:[PORT] -j mccl_test

操作成功,显示如下:

============= MetaX Data Center Management Interface Log =============

Timestamp : Tue Apr 22 16:43:13 2025

{

"results": [{

"node_list": "10.200.146.226:8",

"perf_type": " all_gather_perf",

"nic_dev": " mlx5_7, mlx5_3, mlx5_2, mlx5_0, mlx5_4, mlx5_6, mlx5_1, mlx5_5",

"iter": "2",

"start_time": "2025-04-22 16:40:07",

"end_time": "2025-04-22 16:41:23",

"min_alg_bandwidth": "296.04",

"max_alg_bandwidth": "296.04"

}]

}

MCCL测试结果会存放在 mxdcmd-log 文件夹下,命名为 mccl_stress_log_x_<pid>.log。

pid为测试任务的进程ID。x为MCCL的测试组数,若有多组测试,将生成同一个PID下的多组测试日志。

若查询到测试任务状态很快为 stopped 时,表明已经出错,可通过结果查看具体出错原因,如下所示:

============= MetaX Data Center Management Interface Log =============

Timestamp : Wed Apr 23 13:14:18 2025

{

"results": [{

"node_list": "10.200.146.235:8,10.200.146.226:8",

"perf_type": " all_gather_perf",

"nic_dev": " mlx5_4, mlx5_6, mlx5_3, mlx5_2, mlx5_0, mlx5_7, mlx5_5",

"iter": "1",

"error": " : Test NCCL failure all_gather.cu:47 'internal error / Proxy Call to rank 15 failed (Connect)'"

}]

}

3.10.3.4. 终止测试任务

mx-dcmi命令:

mx-dcmi mccl --host [IP]:[PORT] -x mccl_test

操作成功,显示如下:

Successfully stopped Mccl diag for mccl_test

再次查询mccl_test状态,显示 stopped:

============= MetaX Data Center Management Interface Log =============

Timestamp : Wed Apr 23 13:13:38 2025

Mccl stress job list

+----------------------+---------------+

| Job Name | State |

+----------------------+---------------+

| mccl_test | stopped |

+----------------------+---------------+

End of Log

3.10.3.5. 删除测试任务

mx-dcmi命令:

mx-dcmi mccl --host [IP]:[PORT] -r mccl_test

操作成功,显示如下:

Successfully remove Mccl diag for mccl_test

再次查询任务状态:

============= MetaX Data Center Management Interface Log =============

Timestamp : Wed Apr 23 13:20:51 2025

Mccl stress job list

+-------------------------------------+

| There is no job |

+-------------------------------------+

End of Log

4. 附录

4.1. 术语/缩略语

术语/缩略语 |

全称 |

描述 |

|---|---|---|

HTTP |

Hyper Text Transfer Protocol |

超文本传输协议 |

RESTful |

Representational State Transfer |

一种接口风格 |

MCCL |

MetaX Collective Communications Library |

一个提供GPU间通信原语的库 |

RDMA |

RemoteDirect Memory Access |

远程直接内存访问 |